Prologue

Like many folks who began using Java around ten years ago, I found it very useful with two exceptions: it forced you to use objects for everything, and it was slow.

Since then, nothing much has changed about the object-oriented nature of Java, although some of the more annoying limitations of the early days have been more or less dealt with.

The more serious concern has generally been performance. Java was slow. The reason why isn’t exactly a mystery. Java programs compile to bytecode. C and C++ programs compile to native code. Whereas running a compiled C program is more or less a straightforward matter of executing one instruction after another, running a compiled Java program means running the Java bytecode through an interpreter on the Java virtual machine. The interpreter has been made more clever over the years (it’s now a so-called Just-In-Time Compiler, or JITC, which translates frequently executed bytecode to native code at run time), but the basic principle remained. Java added another level of abstraction to running programs, and abstraction is not free.

A few years ago, I converted my favorite pi-calculating benchmark to Java and ran it with the then-current tools (JDK 1.3 if I recall correctly). It was slower than the native C version, but not enormously so.

Developments

Since then several things have changed. First, Microsoft’s .NET platform has become more than an odd curiosity. Like Java, .NET is basically a large runtime library packaged with a virtual machine (CLR) and its own bytecode format (called CIL). Interestingly, Microsoft encourages not only users of their C# Java clone, but also users of C/C++ to target this platform. In other words, your C++ programs can now also be compiled to run in a virtual machine.

Meanwhile, Sun (now Oracle) and all the C/C++ compiler projects have continued to work on improving performance. Intel in particular is on a roll, seemingly adding a dozen new specialized instructions every year to their microprocessors, and then figuring out clever ways their compilers can take advantage of these: SSE2, SSE3 (also known as PNI), AVX, and whatnot.

The Test

The pi_css5 benchmark uses floating point arithmetic and fast Fourier transforms to efficiently compute large numbers of digits of pi. It makes no use of special object-oriented features, and was written originally in ANSI C. Happily, the code is portable and with a little massaging can be used with pretty much any C++, Java or .NET compiler. The actual test consists simply of running the program to compute 4 million digits of pi.

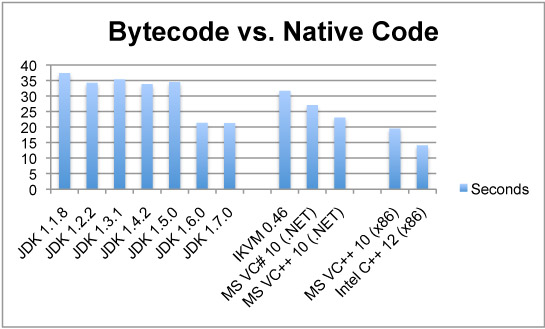

All measurements were performed on a 1.8 GHz Intel Core i5 processor running Windows XP. Measurements are of time to execute (lower is better).
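If you want to take a similar time-to-execute measurement yourself, here is a minimal sketch of a wall-clock timing harness in Java. It is not how the numbers above were gathered (the post doesn't say), and the workload is a hypothetical stand-in: `leibnizPi` is a simple floating point loop, not the pi_css5 algorithm. The class and method names are made up for illustration. It uses `System.currentTimeMillis()` rather than `System.nanoTime()` because the latter only exists from Java 5 onward.

```java
class TimingSketch {
    // Hypothetical stand-in workload: sums the Leibniz series in double precision.
    // This is NOT pi_css5, just a floating point loop that gives us something to time.
    static double leibnizPi(int terms) {
        double sum = 0.0;
        double sign = 1.0;
        for (int k = 0; k < terms; k++) {
            sum += sign / (2.0 * k + 1.0);
            sign = -sign;
        }
        return 4.0 * sum;
    }

    // Runs the workload once and returns the elapsed wall-clock time in milliseconds.
    // The result is printed so the JIT cannot discard the computation as dead code.
    static long timeOnce(int terms) {
        long start = System.currentTimeMillis();
        double result = leibnizPi(terms);
        long elapsed = System.currentTimeMillis() - start;
        System.out.println("pi ~ " + result + " in " + elapsed + " ms");
        return elapsed;
    }

    public static void main(String[] args) {
        timeOnce(50000000);
    }
}
```

A single run like this includes JIT warm-up time, which is arguably what you want when comparing against a natively compiled program started from the command line.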

Setup

The Java code was compiled with the earliest Java compiler I could find (JDK 1.1.8) and run under the various JVM versions. JDK 1.1 was first released in 1997. The current version as of late 2011 is JDK 1.7. Interestingly, using bytecode compiled with newer versions of the JDK made no difference in performance.

The C# code was compiled with Microsoft Visual C# 2010. This code was run in the current 4.0 release of the .NET environment.

The C++ code was compiled with Visual C++ 2010 and Intel C++ 12. Visual C++ 2010 lets you either target .NET (CLR) by generating bytecode or target x86 directly by producing machine code, so I tried both.

The Java code was also tested in the IKVM virtual machine. IKVM is a peculiar Java Virtual Machine which executes inside the .NET virtual machine. In other words, it translates Java bytecode to .NET bytecode, aka CIL.

Observations

1) Java has made substantial performance improvements. Specifically, the JIT in JDK 1.6 and later is within spitting distance (10%) of the optimized x86 code produced by Microsoft’s Visual C++. Given that MSVC++ is probably the most widely used C++ compiler available, that’s quite an achievement.

2) There’s a penalty for using C# over C++ with .NET. This is surprising considering that the bytecode ought to look quite similar (floating point addition, multiplication and whatnot, combined with occasional calls to the math library).

3) The best native compiler (Intel C++) is beating the best JITC (JVM 1.7) by around 50%. That’s a sizable margin and more or less what I saw the last time I compared the two in late 2006. My suspicion is that the Intel compiler is generating FP code that takes advantage of newer processor features (SSE2 vectors and whatnot) whereas the JIT is stuck using generic 80-bit x87 FP arithmetic.

4) IKVM is sort of an odd case. It’s about 10% slower than C# on .NET. But considering that it’s working with two levels of abstraction instead of one, that’s actually surprisingly good. In fact, it beats several of the older JVMs including 1.5.

Code

pi_css5_src.tgz Source code for Java, C# and C++ versions of the program.

Notes

1) JDK 1.1 and 1.2 by default don’t allocate sufficient memory to run pi_css5 for 4 million digits. To raise the maximum heap size, I used the -mx option. -mx131000000 (about 125 MB, just under 128 MB) did the trick.

2) The MSVC++ 10 compiler was invoked with the /O2 /Ot /Oy /GL optimization flags for the native code (optimize for speed, omit frame pointer, perform whole program optimizations). For .NET, I used just /O2 (the others aren’t supported when targeting CLR).

3) The Intel C++ 12 compiler was invoked with the /fast optimization flag. I wish every compiler had a -fast flag.
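As a sanity check on the -mx value in note 1, the sketch below converts the byte count to megabytes (taking 1 MB as 1,048,576 bytes) and prints the heap ceiling the running JVM reports. The class name `HeapCheck` and the helper `toMiB` are made up for illustration. Note that `Runtime.maxMemory()` only exists from Java 1.4 onward, so this won't run on the JDK 1.1 and 1.2 JVMs the note is about; it is meant for checking the setting on a newer JVM.

```java
class HeapCheck {
    // Converts a byte count to mebibytes (1 MiB = 1,048,576 bytes).
    static double toMiB(long bytes) {
        return bytes / (1024.0 * 1024.0);
    }

    public static void main(String[] args) {
        // The byte count passed to -mx in note 1.
        long requested = 131000000L;
        System.out.println("requested: " + toMiB(requested) + " MiB");

        // The maximum heap size the running JVM says it will attempt to use.
        long ceiling = Runtime.getRuntime().maxMemory();
        System.out.println("maxMemory: " + toMiB(ceiling) + " MiB");
    }
}
```

The reported ceiling may differ somewhat from the requested value, since the JVM is free to round or reserve part of the heap for its own use.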