In spite of the improvements made to the Huffman program’s use of I/O functions in the previous post, it’s fairly clear that more can be done. In particular, looking at the profiles for encoding and decoding, the fgetc/fputc pair still account for a significant amount of the program’s runtime. While we succeeded in getting rid of them for reading and writing normal (unencoded) data, we still used them for the encoded data. It turns out that this isn’t too hard to fix either.

simplehuffman3.c implements this idea.

In the original scheme, the actual bits for the Huffman encoding of a given value were pushed onto a char variable. Each time 8 bits had been push on, we wrote the byte out to disk with fputc, zeroed it, and repeated the process. The same idea was used for reading encoded data (fgetc, then extract the 8 bits one at a time).

The simplest change we could make would be to simply use a type of variable that can hold more than 8 bits. An integer can hold 32 for example. Still, considering that the buffer used for unencoded data had a size of 1024, and that the fread/fwrite pair of functions are perfectly happy to write thousands of bytes at a time, it seems like we could do better.

The solution I ended up going with for the ‘bit-buffer’ is essentially an array of integers. Individual elements of the bit-buffer, lets call them blocks, are filled using the same procedure as before, only now this time we have an additional variable keeping track of which block we’re currently storing bits at. When a block fills (we’ve pushed in 32 bits worth of encoding data), we move forward to the next one. Only when we’ve entirely filled the buffer does any I/O activity occur. Again, an analogous process is used for reading encoded data.

The code changes aren’t quite as restricted as they were in the original example, but they’re still confined mainly to just 2 functions – ReadBit and WriteBits.

Here are the profiles again (gathered by oprofile):

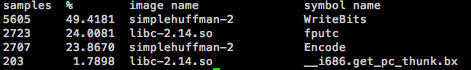

Encoding, before changes:

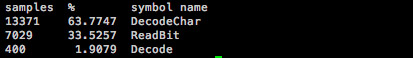

Encoding, after changes:

![]()

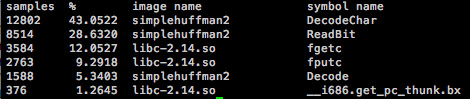

Decoding, before changes:

Decoding, after changes:

Just as expected, the single-byte I/O functions are gone from the profile, and the many byte I/O functions are to insignificant to be shown.

What does this mean in terms of runtime? Well, for encoding, things aren’t that different – the new version is perhaps 10% faster. But for decoding, the difference is enormous – the newer version is nearly a factor of 2 faster.

In short, when you’re dealing with a program that does a lot of I/O, it makes good sense to think of how that I/O is being done!