I’ve been moving around a lot of data lately, particularly over the network, so it seemed like a good idea to settle on a compression regimen. Networks are fast and all, especially at school, but moving multiple gigabytes of data still doesn’t happen instantly. So I did a comparison of the current mainstream compression programs on Linux. The system had a fast SSD, so operations were mainly CPU-bound.

The Contenders

- bzip2 – a fairly popular replacement for gzip, though generally believed to be slower for archiving and unarchiving.

- compress – interesting for historical purposes and accessing old archives, but no longer really used otherwise.

- gzip – intended as a free compress replacement, it’s still the most commonly used UNIX compression tool.

- lzip – an FSF-endorsed LZMA-based encoder claiming higher efficiency than more common tools.

- lzop – uses a similar algorithm to gzip, but claims to be much faster and so particularly useful for large data files.

- xz – an LZMA-based encoder claiming high efficiency and speed.

- zip – still the de-facto standard on Windows, but not particularly popular on Linux.

The Test

To compare, I compressed and decompressed a 220 MB tar archive containing a distribution of the clang C/C++ compiler. For every program other than compress, which has only one setting, I tried the minimum compression setting (-1), the maximum compression setting (-9) and the default setting (no option).
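For anyone who wants to try a similar comparison, here is a rough sketch of the idea in Python. It is not the harness I used for the charts: it assumes Python's standard-library `gzip`, `bz2` and `lzma` modules as stand-ins for the gzip, bzip2 and xz command-line tools, and the `benchmark` and `candidates` helpers and the sample data are made up for illustration. Timings from in-memory library calls will not match the command-line programs exactly.

```python
import bz2
import gzip
import lzma
import time


def benchmark(name, compress, decompress, data):
    """Time one compress/decompress round trip and report the size ratio."""
    start = time.perf_counter()
    packed = compress(data)
    compress_s = time.perf_counter() - start

    start = time.perf_counter()
    restored = decompress(packed)
    decompress_s = time.perf_counter() - start

    # Guard against a broken round trip before trusting the numbers.
    if restored != data:
        raise ValueError(f"{name}: round trip did not reproduce the input")
    return {
        "name": name,
        "ratio": len(packed) / len(data),  # smaller is better
        "compress_s": compress_s,
        "decompress_s": decompress_s,
    }


def candidates():
    """Yield (label, compress, decompress) for levels 1 and 9 of each codec."""
    for level in (1, 9):
        yield (f"gzip -{level}",
               lambda d, l=level: gzip.compress(d, compresslevel=l),
               gzip.decompress)
        yield (f"bzip2 -{level}",
               lambda d, l=level: bz2.compress(d, compresslevel=l),
               bz2.decompress)
        yield (f"xz -{level}",
               lambda d, l=level: lzma.compress(d, preset=l),
               lzma.decompress)


if __name__ == "__main__":
    # Hypothetical sample data; substitute the bytes of a real tar archive.
    sample = b"int main(void) { return 0; }\n" * 20_000
    for label, comp, decomp in candidates():
        r = benchmark(label, comp, decomp, sample)
        print(f"{r['name']:10s} ratio={r['ratio']:.4f} "
              f"compress={r['compress_s']:.3f}s "
              f"decompress={r['decompress_s']:.3f}s")
```

To run it against a real file, read the archive with `open(path, "rb").read()` and pass the bytes in place of `sample`. Tools without a standard-library binding (compress, lzip, lzop) would need to be invoked as external programs instead.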

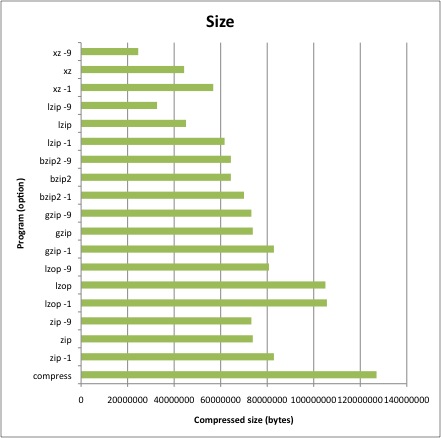

Efficiency

Clearly xz did the best job at compressing the file overall, with lzip slightly behind. At the other end, compress was the least effective, and lzop wasn’t particularly effective either.

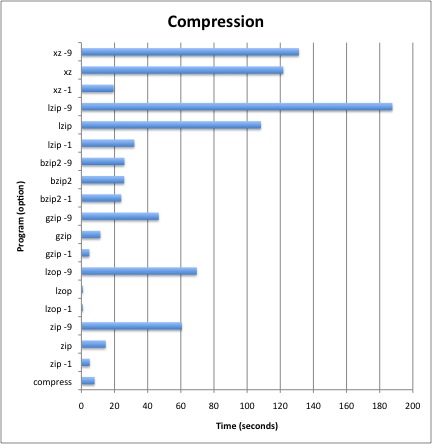

Compression Speed

xz and lzip are certainly the slowest of the bunch, particularly above the -1 setting.

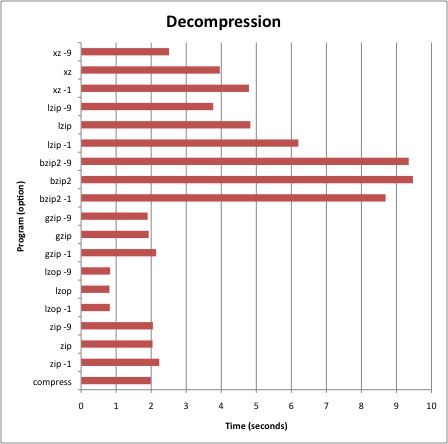

Decompression Speed

Interestingly, there is much less variation in decompression speed, with the obvious exception of bzip2. lzip and xz are also fairly slow. As advertised, lzop is the fastest, by a good margin.

Conclusions

This is only a single dataset, so results may well vary. Still, a few points stick out:

- compress only makes sense for getting at old data. It’s simply too slow and inefficient to use compared to the newer programs.

- bzip2 with any compression level has no advantage over xz -1. xz -1 is faster to compress and decompress, and produces smaller files.

- gzip is a better zip. The differences aren’t huge, but gzip is almost always a bit faster and a bit more efficient than zip at the same compression level. Of course, zip can interchange with Windows users seamlessly, and can compress directories as well as files.

- xz is a better alternative to lzip. With one slight exception, xz was faster and more efficient than lzip with comparable options.

- lzop is fast enough that for very large datasets, it might make sense over the others.

My take-away is that for archiving and transferring large files over the network, xz -9 makes sense. For casually sending files, I’ll stick with zip as it’s fairly fast and by far the most compatible.
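As a small illustration of the archiving case, here is one way to produce an xz-compressed tar archive at the highest preset from Python. This is a sketch, assuming the standard-library `tarfile` module, whose `w:xz` mode accepts a `preset` argument; the `archive_xz` and `list_members` helpers and the paths are hypothetical. The result should be roughly what you get from tarring a directory and running `xz -9` on it.

```python
import tarfile
from pathlib import Path


def archive_xz(source_dir, archive_path, preset=9):
    """Pack source_dir into an xz-compressed tar archive at the given preset."""
    source = Path(source_dir)
    # "w:xz" writes a tar stream through the LZMA compressor;
    # preset=9 corresponds to the maximum compression setting.
    with tarfile.open(str(archive_path), "w:xz", preset=preset) as tar:
        tar.add(str(source), arcname=source.name)
    return Path(archive_path)


def list_members(archive_path):
    """Return the member names stored in an xz-compressed tar archive."""
    with tarfile.open(str(archive_path), "r:xz") as tar:
        return tar.getnames()
```

For example, `archive_xz("clang", "clang.tar.xz")` would pack a directory named `clang`, and `list_members("clang.tar.xz")` would show what went in. Dropping `preset` to 1 trades some file size for much faster compression.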